1D Code

A type of barcode that encodes information in a linear arrangement of parallel lines. These codes are read using a single scan line, typically with a laser or image-based scanner. The data gets decoded by measuring the variations in line thickness and spacing.

1D Imaging

Involves capturing and analysing data in a single dimension. In the context of machine vision, this typically means collecting data along a single line or axis. This is often used for tasks like barcode scanning, where a laser or camera captures information in a linear format.

2D Code

A 2D code consists of a pattern encoding information in two dimensions using a matrix of square or rectangular patterns, dots, or geometric shapes.

2D codes feature a more intricate layout compared to 1D codes, requiring more advanced technology for acquisition and decoding. To read 2D codes, scanners must capture and interpret the entire pattern depicted by the barcode, rather than just a single line.

2D codes can store data both horizontally and vertically, allowing them to store more information in a smaller space. Some examples of 2D codes include QR codes and DataMatrix codes.

2D Imaging

Involves capturing and analysing data in two dimensions, typically representing an area or plane. This is more akin to traditional photography or video, where an image or a frame of video is captured in a rectangular format, containing both width and height information.

For some examples on what 2D sensors can do, click here.

3D Imaging

Refers to the technology and processes used to capture, process, and analyse three-dimensional information from a scene or object. Unlike traditional 2D imaging, which produces flat images, 3D imaging systems provide depth information, allowing machines to perceive and understand the spatial characteristics of objects in a more comprehensive manner.

To explore what you can achieve with 3D sensors, click here.

Aa

Aberration

The deviation or distortion of light rays as they pass through an optical system, such as a lens or a mirror. In a machine vision context, it pertains to the impact on image quality and accuracy.

Absorption

The process where the light hitting a surface is absorbed, preventing its reflection or transmission. This can be noticed on dark-coloured opaque plastics and should be considered when choosing lighting for your application.

Algorithm

A set of instructions and calculations used by computers to achieve objectives. In factory automation, algorithms analyse data from sensors, scanners, and cameras to identify inefficiencies and suggest improvements.

Alphanumeric Code

A type of code combining numbers, letters, symbols and punctuation to encode product information. The sequence of these characters conveys specific information, making it possible to represent structured data. This form of encoding is commonly used in barcode systems.

Ambient Light

Ambient light refers to all light from sources other than the light source integrated into the machine vision system, such as sunlight or interior lighting fixtures.

AOI

Automated Optical Inspection: the automated visual inspection of objects using optical imaging. AOI systems rely on cameras and image processing algorithms to analyse various visual characteristics of an object.

Aperture

Refers to an adjustable opening within an optical system, such as a camera or lens, that controls the amount of light entering the system.

It plays a crucial role in regulating the exposure of an image sensor to incoming light. By adjusting the size of the aperture, typically measured in f-stops, the operator can influence the depth of field and control the amount of light reaching the sensor.

A larger aperture (lower f-stop number) allows more light to pass through and results in a shallower depth of field, while a smaller aperture (higher f-stop number) reduces light and increases depth of field. Stopping the lens down for greater depth of field therefore calls for brighter illumination, which is why TPL Vision manufactures lighting with OverDrive strobe to increase the brightness of the illumination.

Artificial Intelligence (AI)

The capability of a digital computer or computer-controlled robot to execute tasks typically associated with human decision-making.

In factory automation, AI algorithms utilize technologies like image recognition and natural language processing to reduce human error and anticipate production challenges.

Aspect Ratio

The aspect ratio of an image is determined by dividing its displayed width by its height, typically represented as "x:y".

For example, a 1.2 MP camera with a 4:3 aspect ratio will have 1280 x 960 pixels on the sensor.
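The arithmetic behind this example can be checked with a short sketch. The function names `aspect_ratio` and `megapixels` are illustrative only and do not come from any camera SDK.

```python
from math import gcd


def aspect_ratio(width_px: int, height_px: int) -> str:
    """Reduce width:height to its simplest whole-number form."""
    divisor = gcd(width_px, height_px)
    return f"{width_px // divisor}:{height_px // divisor}"


def megapixels(width_px: int, height_px: int) -> float:
    """Total pixel count expressed in millions of pixels."""
    return width_px * height_px / 1_000_000


print(aspect_ratio(1280, 960))          # 4:3
print(round(megapixels(1280, 960), 2))  # 1.23
```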

Bb

Backlighting

Placement of a light source behind an object being imaged or inspected.

This illumination method is commonly used to create a high-contrast silhouette of the object’s edges. Unlike bright field lighting, backlighting illuminates the object from the opposite side of the camera, highlighting surface defects, imperfections, or features that might be difficult to illuminate using other lighting methods.

See our range of backlights here.

Bandpass Filter

Optical device used to transmit a specific range of wavelengths while blocking other wavelengths.

Bandpass filters allow only a narrow band of light to pass through, enabling the camera to capture images with enhanced contrast and visibility of certain features. These filters are valuable in applications where specific colours or wavelengths of light need to be isolated for precise inspection, object recognition, or image analysis tasks.

Barcode

A type of image that encodes data into a graphical representation that can be read and interpreted by barcode scanners or machine vision cameras.

Bayer Conversion

The process of transforming Bayer colour data, acquired from a Bayer matrix or colour filter array, into RGB colour representation.

Bar Lighting

Also referred to as linear lighting, bar lighting is a technique that uses a linear array of light sources to provide directional illumination.

Browse our range of bar lights here.

BGA

Ball-Grid Array, a type of surface-mount packaging used for integrated circuits.

Big Data

Technologies that consolidate vast data sets from multiple sources and use analytics tools to generate unique insights. Artificial Intelligence relies on big data to emulate human decision-making and produce accurate forecasts.

Bit Depth

The number of bits used to represent the colour or greyscale information of each pixel in an image. It determines the range of colours or shades of grey that can be captured and displayed by the imaging system.

- For greyscale images, bit depth indicates the number of different grey levels that can be represented. For example, an 8-bit greyscale image has 2^8 (256) possible shades of grey, ranging from black (0) to white (255).

- In the case of colour images, bit depth is typically assigned to each colour channel (e.g., red, green, and blue) individually. For instance, an 8-bit colour image has 256 levels of intensity for each colour channel, resulting in a total of over 16 million possible colours (256^3).
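The figures in the two examples above follow directly from powers of two. A minimal sketch, with illustrative function names:

```python
def grey_levels(bit_depth: int) -> int:
    """Number of distinct intensity levels for one channel."""
    return 2 ** bit_depth


def colour_count(bits_per_channel: int, channels: int = 3) -> int:
    """Number of distinct colours when every channel has the same bit depth."""
    return grey_levels(bits_per_channel) ** channels


print(grey_levels(8))    # 256
print(colour_count(8))   # 16777216
print(grey_levels(12))   # 4096
```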

Bright Field Lighting

Refers to a lighting setup in which the light source is positioned to illuminate the object being examined from the same side as the camera or imaging sensor.

Bright field lighting is commonly used to capture detailed surface characteristics and colours. It is most effective when applied to objects with distinct surface features, well-defined colours, and uncomplicated shapes.

Cc

Camera Triggering

The process of externally controlling when a camera captures an image, often synchronized with external events, like the movement of an object on a conveyor belt.

Charge-Coupled Device (CCD)

CCD (Charge-Coupled Device) and CMOS (Complementary Metal-Oxide-Semiconductor) are two different types of image sensors used in digital cameras, including those used in machine vision systems.

Charge-Coupled Device (CCD) is an older and more traditional technology for capturing digital images. CCD sensors generally provide higher image quality, better dynamic range, and lower noise levels, especially in low-light conditions. However, they are more expensive to manufacture and consume more power.

CMOS

CMOS (Complementary Metal-Oxide-Semiconductor) and CCD (Charge-Coupled Device) are two different types of image sensors used in digital cameras, including those used in machine vision systems.

Complementary Metal-Oxide-Semiconductor (CMOS) image sensors are more recent and have become increasingly popular. CMOS sensors use a different manufacturing process that allows each pixel to have its own individual amplifier, making it easier to integrate other circuitry on the same chip. CMOS sensors are generally more power-efficient and cost-effective to produce, which has contributed to their widespread adoption in consumer electronics and industrial applications.

C-mount

Interchangeable lenses that use a standard C-mount thread to attach to machine vision cameras. C-mount lenses allow users to easily switch between different focal lengths and optical configurations to adapt to various imaging requirements and working distances. The C-mount features a 1-inch diameter threaded mount with a pitch of 32 threads per inch (1″-32UN).

Collimated Light

Light that is composed of parallel rays, with minimal divergence or convergence. This type of light is emitted in a way that its rays are nearly parallel and do not significantly spread out as they travel, making it perfect for precise measurement or feature detection applications.

This type of light is typically paired with a camera and a telecentric lens.

Colour Temperature

A characteristic of white lighting sources referring to the colour appearance of the light they emit, typically measured in Kelvin (K).

Continuous Working

A lighting configuration that provides consistent and uninterrupted illumination for extended periods.

Contrast

The variation in pixel intensity or colour between different regions or objects within an image. High contrast indicates distinct differences in pixel values, making it easier for machine vision systems to detect and analyse objects accurately.

CS-mount

Uses the same thread as the C-mount (see C-mount), except that the flange focal distance is about 5 mm shorter (12.5 mm instead of 17.526 mm). The shorter distance allows the camera sensor to be closer to the lens, making it more suitable for use with smaller image sensors.

Dd

Daisy-Chain

An operating mode whereby multiple devices or components, such as cameras, lighting sources, or sensors, are linked together in a sequential manner using a single communication line. Each unit is linked to the preceding and succeeding units through a single cable, which allows for the centralised control of all units from a single control point. In applications requiring synchronized illumination, daisy chaining ensures that all lighting units receive control signals at the same time.

Dark Field Lighting

Lighting technique that highlights surface irregularities or defects by directing light at a very low angle relative to the object’s surface (a steep angle to the camera’s optical axis), causing the features to stand out distinctly against a uniform, dark background.

This technique is ideal for highlighting scratches, particles, or surface irregularities on shiny or transparent objects.

Browse our range of low angle lights here.

Data Matrix

A two-dimensional barcode that encodes information in the form of black and white squares arranged in a grid pattern. It is used to store and retrieve data such as product information, serial numbers, and other data sets in a compact format. It normally has a simpler design than a QR code.

Deep Learning

An Artificial Intelligence (AI) technology designed to automate complex and customized applications. A subset of Machine Learning.

Processing takes place via a graphics processing unit (GPU), which enables users to build sophisticated neural networks from large, detailed image sets. Leveraging these neural networks, deep learning can effectively analyse vast image sets and detect subtle, variable defects to differentiate between acceptable and unacceptable anomalies.

Diaphragm

Refers to the adjustable aperture mechanism within a lens that controls the amount of light entering the camera sensor. It is used to adjust the size of the lens opening, known as the aperture, which directly affects the depth of field and the amount of light reaching the sensor.

Diffuse Lighting

Illumination that provides even, non-directional light to minimize shadows and highlights.

Disturbance

Unwanted or unintended variation, interference, or change in the imaging environment that can negatively impact the image evaluation.

Dome Lighting

Often referred to as “cloudy day illumination”, dome lighting is a popular lighting technique in machine vision due to the diffuse and evenly distributed illumination it produces. Dome lights come in two forms, either a flat-type with a camera hole in the centre for illuminating large areas, or those with the “traditional” round dome-shaped enclosure containing LEDs illuminating an internal reflective surface.

Commonly used for inspecting shiny or curved surfaces, dome lighting can help minimize shadows, reduce reflections, and enhance surface details.

Find our range of dome and flat dome lights here.

Dot Peen Marking

A type of marking procedure that entails using a stylus or pin to produce a sequence of small, closely spaced dots, forming a data matrix code on the material’s surface. This method is commonly employed in industrial applications for permanent labelling, identification, and traceability of various objects, such as metal, plastic, and other hard materials.

Duty Cycle

Refers to the ratio of the time a device or component is active or ON to the total time of a complete cycle. In illumination, it relates to the percentage of time that a lighting source is emitting light during an operational cycle.

When operating a light in OverDrive mode, the specified maximum duty cycle for the switching of the light on and off must be respected to avoid damaging the product.
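As a rough illustration, the duty cycle of a strobed light can be computed from its pulse length and the cycle period. The 10 % limit used below is a made-up placeholder, not a TPL Vision specification; the real maximum must be taken from the product's datasheet.

```python
def duty_cycle(on_time_s: float, period_s: float) -> float:
    """Fraction of each cycle during which the light is ON (0.0 to 1.0)."""
    if period_s <= 0 or not 0 <= on_time_s <= period_s:
        raise ValueError("need 0 <= on_time_s <= period_s and period_s > 0")
    return on_time_s / period_s


def within_limit(on_time_s: float, period_s: float, max_duty_cycle: float) -> bool:
    """True if the requested pulse pattern respects the given maximum."""
    return duty_cycle(on_time_s, period_s) <= max_duty_cycle


# A 100 microsecond pulse once every 10 ms is a duty cycle of about 1 %.
ratio = duty_cycle(100e-6, 10e-3)
print(f"{ratio:.1%}")

# Hypothetical limit of 10 %, for illustration only.
print(within_limit(100e-6, 10e-3, max_duty_cycle=0.10))
```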

Dynamic Range

Refers to the camera’s ability to capture a wide range of brightness levels in an image, from the darkest shadows to the brightest highlights.

Ee

Edge Learning

A subset of Machine Learning. Processing takes place on-device, or “at the edge,” using a pre-trained set of algorithms. This technology is simple to calibrate, requiring smaller image sets (as few as 5 to 10 images) and shorter training periods than traditional Deep Learning-based solutions.

Exposure Time

The duration for which the camera’s image sensor is exposed to light while capturing an image.

A longer exposure time allows more light to reach the sensor but can lead to motion blur if the object or the camera moves during the exposure.

Ff

Feature Extraction

The process of identifying and isolating specific patterns, shapes, or characteristics from raw image data.

Feature extraction seeks to capture relevant information while discarding irrelevant or redundant details. This process is akin to how the human visual system focuses on essential aspects of an image while ignoring background noise or irrelevant elements. Feature extraction algorithms work to highlight distinctive aspects of the image, such as edges, corners, textures, shapes, or colour variations, which can hold vital information for subsequent analysis.

Feature Matching

A process in which algorithms identify and match specific features or patterns in images to determine correspondences between different views or scenes. This is often used in robotics, navigation, and augmented reality.

Feedback Loop

The iterative cycle where machine learning algorithms and models continually learn and enhance their performance.

Flange Distance

Distance from the mounting flange (the "metal ring" at the rear of the lens) to the camera detector plane.

F-Number, F#

The ratio of the focal length of a lens to the diameter of its aperture. It represents the size of the aperture relative to the lens’s focal length and plays a significant role in controlling the amount of light that enters the camera system.
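The definition can be written as N = f / D. The sketch below also uses the standard relationship that the light reaching the sensor scales with 1 / N²; the function names are illustrative only.

```python
def f_number(focal_length_mm: float, aperture_diameter_mm: float) -> float:
    """F-number N = focal length / aperture diameter."""
    if aperture_diameter_mm <= 0:
        raise ValueError("aperture diameter must be positive")
    return focal_length_mm / aperture_diameter_mm


def relative_light(n_from: float, n_to: float) -> float:
    """Factor by which the light changes when moving from one f-number to another."""
    return (n_from / n_to) ** 2


print(f_number(25.0, 12.5))      # 2.0, i.e. f/2
print(relative_light(2.0, 4.0))  # 0.25: f/4 passes a quarter of the light of f/2
```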

Focal Length

The distance between the lens’s optical centre and the image sensor or the point where light rays converge to form a focused image. It determines the magnification and field of view of the captured image.

A shorter focal length results in a wider field of view but potentially smaller magnification, while a longer focal length offers a narrower field of view with the potential for greater magnification. The shortest focal length lenses have a “fish-eye” effect with a lot of optical distortion.

Frame Rate

The number of individual images or frames that the camera can capture per second, measured in frames per second (fps). A higher frame rate allows the camera to capture fast-moving objects.

Gg

Global Shutter

A type of camera sensor technology that allows all pixels in the image to be exposed simultaneously. Because a global shutter sensor captures the entire frame at the same instant, the motion of objects in the scene is frozen without skew.

Global shutter cameras are particularly suited to applications that involve moving objects or fast-paced scenes, as they provide accurate and distortion-free images of dynamic environments.

Hh

HMI (Human-Machine Interface)

The interface that allows human operators to interact and communicate with machine vision systems, typically through graphical interfaces.

Homogeneity

The uniform distribution of light intensity, in which the intensity of light is consistent across the entire field of view.

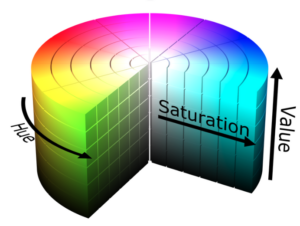

HSV Colour Space

A colour model that represents colours using three components: Hue (H), Saturation (S), and Value (V).

- Hue: the pure colour information, ranging from 0° to 360° on a colour wheel. Defines the colour’s dominant wavelength.

- Saturation: the intensity or purity of the colour, ranging from 0 (grey or unsaturated) to 100 (full colour saturation).

- Value: the brightness of the colour, ranging from 0 (black) to 100 (full brightness).
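A minimal sketch of converting an 8-bit RGB pixel to these ranges, using Python's standard `colorsys` module. `colorsys` works with values between 0 and 1, so the helper `rgb_to_hsv_scaled` (an illustrative name) rescales the result to degrees and percentages. Other libraries may use different scales.

```python
import colorsys


def rgb_to_hsv_scaled(red: int, green: int, blue: int) -> tuple:
    """Convert 8-bit RGB to (hue in degrees, saturation in %, value in %)."""
    h, s, v = colorsys.rgb_to_hsv(red / 255, green / 255, blue / 255)
    return round(h * 360, 1), round(s * 100, 1), round(v * 100, 1)


print(rgb_to_hsv_scaled(255, 0, 0))      # pure red: (0.0, 100.0, 100.0)
print(rgb_to_hsv_scaled(128, 128, 128))  # mid grey: saturation is 0
```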

Hyperspectral Imaging

Capturing and analysing images in a multitude of narrow and contiguous spectral bands, enabling the identification of materials based on their unique spectral signatures.

Unlike traditional imaging, which records colour information typically in three bands (red, green, blue), hyperspectral imaging divides the electromagnetic spectrum into numerous narrow bands, allowing for highly detailed spectral information to be acquired for each pixel in an image.

Ii

Illuminance

The amount of luminous flux (light) incident on a unit area of a surface, measured in lux (lx). One lux equals one lumen per square metre.
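As a simple worked example, average illuminance is luminous flux divided by the illuminated area. The sketch assumes that all of the flux lands on the surface and is spread evenly, which real lighting only approximates.

```python
def illuminance_lux(luminous_flux_lm: float, area_m2: float) -> float:
    """Average illuminance in lux, assuming evenly distributed flux."""
    if area_m2 <= 0:
        raise ValueError("area must be positive")
    return luminous_flux_lm / area_m2


# 1000 lm spread evenly over 2 square metres gives 500 lx.
print(illuminance_lux(1000.0, 2.0))  # 500.0
```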

Infrared Light

Infrared light refers to electromagnetic radiation with wavelengths longer than those of visible light, typically ranging from approximately 700 nanometers (nm) to 1 millimeter (mm).

Infrared light is employed in machine vision for various purposes, including enhancing visibility in low-light conditions or complete darkness, detecting temperature variations in thermal imaging, and enabling the detection of specific materials or objects based on their unique infrared signatures.

IR light is invisible to the human eye, so it is often used in applications where people are operating nearby. IR light can also transmit through materials such as opaque plastics and conveyor belts. This is due to the longer wavelengths interacting less with the material they pass through. Note: some cameras are less sensitive to IR light (see spectral range).

Internet of Things (IoT)

A network of internet-connected physical objects or "things" embedded with sensors that collect and exchange real-time data with other devices and systems over the internet. This allows the devices to be remotely monitored, controlled, and coordinated, without direct human involvement.

Image Evaluation

The process of analysing and assessing the content and attributes of images captured by cameras or sensors.

Image Processing

A digital technique that involves manipulating and analysing digital images to extract useful information or perform specific tasks. It encompasses a wide range of algorithms and methods to modify, filter, and analyse images to obtain desired results.

Image Sensor

Semiconductor device that converts an optical image into an electrical signal. It captures light and generates digital representations of the scene, forming the basis for further image processing and analysis.

Ll

LED

Light Emitting Diode. LEDs are commonly used in machine vision due to their many advantages, including controllable intensity, low power consumption, and long lifespan.

Lens

Optical component used in machine vision systems to focus and manipulate light. Lenses are essential for capturing and forming clear images of objects for analysis and inspection.

They work by bending or refracting light rays to converge them onto an image sensor. Lenses can control the depth of field, magnification, and correct optical aberrations.

Line Scan

A method used in machine vision applications to capture images one line at a time, as opposed to capturing the entire image at once. A line scan camera contains a single row of pixels arranged in a linear array and captures each line of the image as it moves across the field of view.

Line scan cameras are commonly used in applications where objects are moving rapidly or when high-speed, high-resolution imaging is required, such as in web inspection, surface inspection, and printing quality control. These applications also require powerful and uniform lighting.

Longpass Filter

An optical device used to transmit wavelengths of light that are longer (lower in frequency) than a specified cutoff wavelength, while blocking shorter (higher-frequency) wavelengths.

Unlike bandpass filters that allow only a narrow band of light to pass through, longpass filters permit a broader range of wavelengths to transmit, effectively filtering out shorter wavelengths.

Low Angle Lighting

Lighting technique where objects are lit from a shallow angle relative to the camera’s optical axis, typically around 45° off-axis.

This method creates pronounced contrasts between raised and recessed features, thereby aiding in the accurate detection of defects, quality assessment, and precise surface inspection tasks.

See our range of low angle lights here.

Luminous Flux

A measure of the total amount of visible light emitted or radiated by a light source, quantified in lumens (lm).

Mm

Machine Learning

A subset of Artificial Intelligence (AI) that involves the development of algorithms and models enabling computer systems to learn from and make predictions or decisions based on data. It encompasses techniques where systems iteratively analyse and adapt to data, improving their performance over time.

Machine Vision

A wide-ranging term referring to the use of hardware components (typically sensors and cameras) and algorithms to enable machines to interpret visual information from their surrounding environment.

The use of machine vision is commonly found not only on production lines, but also in traffic and space applications, farming and agriculture, and many other fields.

Metrology

The science of measurement and accuracy. In the context of machine vision, metrology refers to the use of imaging and measurement technologies to measure objects and surfaces accurately and precisely within a scene.

Multispectral Imaging

Multispectral imaging captures information across a few specific wavelength bands, which can include visible light and selected portions of the infrared spectrum. While it provides less detailed spectral data compared to hyperspectral imaging, it offers valuable insights into specific features and attributes of objects and materials.

Nn

Neural Network

A type of Artificial Intelligence model inspired by the human brain’s structure and function. Neural networks consist of interconnected nodes, or "neurons", organized in layers that process and transform data. They can recognize patterns, make predictions, and solve complex problems across various domains, such as image and speech recognition, natural language processing, and decision-making tasks.

Oo

Object

Refers to the part or component within the scene which is to be analysed or detected.

Object Detection

The ability of a machine vision system to identify and localise instances of objects in an image.

Object Recognition

The ability of a machine vision system to identify and classify objects within an image based on predefined patterns, features, or characteristics.

Optical Character Recognition (OCR)

Software dedicated to processing images of written text into a computer-understandable format. It allows computers to recognize and extract text from images, enabling efficient data processing and manipulation.

Optical Character Verification (OCV)

A technique used to verify the correctness of printed characters or symbols on products. OCV systems ensure that printed text or codes match expected standards.

Optical Filters

Devices used to modify the spectral content of light by allowing certain wavelengths to pass through while blocking others. Filters can be used for colour correction, contrast enhancement, and more.

OverDrive

A specialized setting where the light source is momentarily driven with higher power than its rated output to obtain an increase in illumination intensity for a short period of time.

Overfitting

When a machine learning model learns to perform well on training data but fails to generalize to new, unseen data. For the opposite, see underfitting.

Pp

Part Localisation

Identifying the position and orientation of specific objects or parts within an image. This is useful for tasks such as robotic pick-and-place operations.

Pattern Recognition

The capability of a machine vision system to identify and differentiate specific patterns or shapes within an image, crucial for identifying objects or features.

PCB

Printed Circuit Board.

Photonics

An interdisciplinary field that studies the science and technology of light.

Photonics in machine vision encompasses the development and application of devices and systems like lasers, LEDs, lenses and sensors.

Pick-and-Place

A robotic or automated manufacturing process that involves the selection (picking) of objects from one location and the relocation (placing) of these into another location, often with precision and speed. By integrating machine vision systems, these processes gain the capability to visually perceive objects, their positions, and attributes, allowing for adaptive decision-making and precise placement.

Pixel

The smallest unit of an image, representing a single point of colour or greyscale information.

Polarization

The orientation of light waves in a specific direction within an electromagnetic wave. Polarized light is light in which the oscillations of the electric field occur predominantly in a single plane.

Polarizing Filter

A filter designed to enhance image quality and visibility by reducing glare, reflections, and unwanted light in scenes with varying levels of reflectivity.

It achieves this by selectively allowing specific polarized light waves to pass through the filter while blocking others.

Process Interface

The communication and integration between the machine vision system and other components or processes within a larger manufacturing or automation setup (e.g. PLC, sensors, process control).

Qq

QR Code

A two-dimensional matrix barcode that encodes information in the form of black and white squares. It is commonly used for storing data such as URLs, text, or other information that can be quickly scanned and read by devices with cameras or QR code readers.

QR codes are more visually intricate than Data Matrix codes, featuring a distinct square pattern with alignment markers. This design enables QR codes to be scanned from different angles and even if they are partially obscured or damaged.

Rr

Reflection

The process by which light incident on a surface is bounced back or redirected in different directions. The angle at which the light is reflected depends on the angle of incidence and the properties of the surface itself.

Reflection is a crucial aspect of machine vision, as it can be used to capture information about surface characteristics that can be beneficial in texture analysis and positioning/alignment tasks. However, if not properly managed, reflections can cause hot spots and glare, interfere with accurate measurements, reduce contrast and even lead to false defects.

Refraction

The bending of light as it passes through different materials with varying refractive indices. It occurs when light passes from one medium into another and its speed changes, causing the light rays to bend at the boundary between the two media.

Remission

The ratio of the light reflected by a surface to the light incident upon it, that is, a quantitative measure of how much light an object reflects compared to how much it receives. Remission, also called reflectance, is often expressed as a percentage or a value between 0 and 1, where 0 represents complete absorption (no reflection) and 1 represents complete reflection (no absorption).

RGB

An additive colour model used in electronic displays, cameras, and lighting, in which the three primary colours (Red, Green, Blue) are used to represent and capture colour information. By combining different intensities of these three primary colours in each pixel, a wide range of colours can be displayed and analysed.

Ring Lighting

Positioning a circular arrangement of light sources, most commonly in the shape of a ring, around the camera. It provides even illumination from all directions, reducing shadows and glare, making it suitable for tasks like capturing texture and surface finish.

Find out more about ring lights here.

Rolling Shutter

A camera sensor feature that captures the image by scanning the scene sequentially, line by line, rather than capturing the entire image simultaneously.

This scanning process introduces a time delay between the exposure of the top and bottom portions of the image. As a result, in fast-moving scenes or when capturing rapidly changing subjects, the rolling shutter can lead to a distortion known as the "rolling shutter effect". This effect becomes visible when objects or elements within the scene are in motion during the exposure. In contrast, a global shutter captures the entire image simultaneously.

Ss

Scene

Refers to the specific area or environment being observed or analysed by the vision system. It encompasses all the objects, components, and features present within the field of view of the camera or imaging system.

Scheimpflug

Refers to the Scheimpflug principle: a geometric rule that describes the orientation of the plane of focus of an optical system (such as a camera) when the lens plane is not parallel to the image plane.

Segmentation

Dividing an image into distinct regions based on certain attributes, aiding in object detection and analysis.

The primary goal of segmentation is to separate objects or areas of interest from the background and from each other, enabling more focused and accurate analysis of individual components within an image.

Sensor Size

The physical dimensions of the camera sensor, affecting field of view and image quality.

SMD

Surface-Mounted Device. Refers to electronic components that are designed to be mounted directly onto the surface of a printed circuit board (PCB), without leads that pass through holes in the board. Components that do use such leads are called “through-hole” components.

Spectral Range

The range of wavelengths of light that a camera or sensor can detect. It determines what types of light, such as visible, ultraviolet, or infrared, the system can capture.

Spot Lighting

Lighting technique that involves directing a concentrated and focused beam of light onto a specific point or region of interest within a scene or object.

It finds application in tasks such as highlighting features for detailed inspection, precise dimensional measurement, aiding barcode and text reading, guiding alignment and positioning tasks, detecting foreign objects, and facilitating vision-guided robotics for accurate object recognition and manipulation.

State of Motion

The physical condition or configuration of an object or system regarding its movement or displacement. It encompasses characteristics such as speed, direction, acceleration, and position at a specific point in time.

Stereo Vision

A 3D imaging technique involving two or more cameras to capture multiple perspectives of the same scene, allowing for depth estimation based on disparities between corresponding points in the images.
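
For two parallel, rectified cameras, depth follows from disparity through the standard relation Z = f · B / d. The sketch below assumes that idealised setup; the function name and the example values are hypothetical.

```python
def depth_from_disparity(focal_length_px, baseline_m, disparity_px):
    """Depth of a point seen by two parallel, rectified cameras.

    focal_length_px: focal length expressed in pixels
    baseline_m:      distance between the two camera centres, in metres
    disparity_px:    horizontal offset of the point between the two images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical rig: 1200 px focal length, 10 cm baseline.
near = depth_from_disparity(1200.0, 0.10, 48.0)
far = depth_from_disparity(1200.0, 0.10, 24.0)
print(f"48 px disparity: {near:.2f} m")
print(f"24 px disparity: {far:.2f} m")
```

Larger disparities correspond to closer points, which is why depth resolution degrades with distance.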

Strobe Mode

Operating mode where the light source emits short bursts of intense illumination with precise timing. These bursts of light are synchronized with the camera’s exposure time, ensuring that the subject is illuminated only when the camera is actively capturing an image. Strobe mode is commonly used to freeze motion, reduce motion blur, and enhance the clarity of fast-moving objects or scenes.
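
The benefit of a short strobe pulse can be estimated with a simple blur calculation. This sketch assumes that ambient light is negligible, so the object is only recorded while the light is on; the function name and all values are hypothetical.

```python
def motion_blur_px(speed_mm_per_s, light_on_time_s, mm_per_px):
    """Distance the object travels while illuminated, in image pixels."""
    return speed_mm_per_s * light_on_time_s / mm_per_px


# Hypothetical conveyor moving at 2 m/s, imaged at 0.1 mm per pixel.
blur_continuous = motion_blur_px(2000.0, 1e-3, 0.1)   # 1 ms exposure, light always on
blur_strobed = motion_blur_px(2000.0, 20e-6, 0.1)     # 20 microsecond strobe pulse
print(f"Continuous light: {blur_continuous:.1f} px of blur")
print(f"Strobed light:    {blur_strobed:.1f} px of blur")
```

With these assumed figures the blur drops from about 20 pixels to less than half a pixel, which is what "freezing" motion means in practice.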

Structured Light

A 3D imaging technique used to capture the three-dimensional shape or topography of objects or scenes. It works by projecting a known pattern of light onto the object or scene and then analysing how that pattern distorts due to the object’s surface geometry.

Tt

Telecentricity

Refers to the property of a telecentric lens of keeping the chief rays parallel to the optical axis, so that the apparent size of an object does not change with its distance from the lens.

Thresholding

Image processing technique converting an image into binary form by classifying pixels as either foreground or background based on intensity.
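
A minimal sketch of global thresholding on a small grayscale image, represented here as nested lists of 8-bit intensities. The function name, the sample pixel values, and the threshold level of 128 are arbitrary illustrations.

```python
def threshold(image, level):
    """Return a binary image: 1 where a pixel is brighter than level, else 0."""
    return [[1 if pixel > level else 0 for pixel in row] for row in image]


gray = [
    [12, 40, 200],
    [35, 180, 220],
    [10, 25, 190],
]
binary = threshold(gray, level=128)
for row in binary:
    print(row)
```

Here bright pixels are treated as foreground; for dark objects on a bright background the comparison would simply be inverted.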

Time-of-Flight (ToF)

In machine vision, ToF refers to using sensors that measure the time it takes for light or other signals to travel from the camera to an object and back to determine distance.
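
The underlying calculation is short: the light covers the distance twice, so the distance is half the round-trip time multiplied by the speed of light. The sketch below assumes a direct time measurement; the function name and the example time are hypothetical.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # speed of light in vacuum


def tof_distance_m(round_trip_time_s):
    """Distance to the object from the measured round-trip time of light."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2


distance = tof_distance_m(20e-9)  # 20 nanoseconds round trip
print(f"{distance:.3f} m")
```

A round trip of 20 nanoseconds corresponds to roughly 3 metres, which illustrates how precise the timing has to be.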

ToF sensors are used in various applications, such as goal-line technology in football, tennis hawk-eye systems and the sensors that allowed the creation of the 3D Google Maps function.

Traceability

The ability to track and record the history, location, and movement of products and components throughout the manufacturing and distribution process using identification techniques.

Transmission

The process by which light passes through a surface without being significantly absorbed or reflected. When light encounters a transparent or translucent material, a portion of it can be transmitted through. The amount of light transmitted depends on the properties of the material, such as thickness, and the wavelength of the light.

Uu

Ultraviolet (UV) Light

A form of electromagnetic radiation with shorter wavelengths than visible light, typically ranging from about 10 nanometers (nm) to 380 nm.

UV light is invisible to the human eye but has numerous applications in machine vision, including fluorescence inspection, security mark and tax stamp verification, surface inspection and contamination detection.

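UV light is commonly categorised into three regions: UVA (315-400 nm), UVB (280-315 nm), and UVC (100-280 nm). The upper limit of UVA is quoted as either 380 nm or 400 nm, depending on the convention used.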

Underfitting

When a machine learning model is too simple to capture the underlying patterns in the data. Unlike overfitting, where the model performs well on the training data but poorly on new data, underfitting results in poor performance on both.
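
A small illustration of underfitting, assuming a deliberately simple case: a straight line fitted by least squares to data that follows a curve (y = x²). The helper names and the data are made up for the example.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    return slope, mean_y - slope * mean_x


def mse(xs, ys, slope, intercept):
    """Mean squared error of the line on the given points."""
    return sum((slope * x + intercept - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Training data and new data both follow y = x**2.
train_x = [-3, -2, -1, 0, 1, 2, 3]
train_y = [x ** 2 for x in train_x]
test_x = [-2.5, -1.5, 1.5, 2.5]
test_y = [x ** 2 for x in test_x]

slope, intercept = fit_line(train_x, train_y)
train_error = mse(train_x, train_y, slope, intercept)
test_error = mse(test_x, test_y, slope, intercept)
print(f"training error: {train_error:.2f}, error on new data: {test_error:.2f}")
```

The line cannot follow the curve, so the error stays large on the training data as well as on the new data, whereas a model able to represent x² would reach zero error on both.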

Ww

Wavelength

The spatial distance between successive crests (or troughs) in an electromagnetic wave. The wavelength of light determines its colour and the type of radiation it represents.

Visible light spans wavelengths from 380 to 780 nanometers (nm). The ultraviolet spectrum covers wavelengths below 380 nm, and the infrared spectrum covers wavelengths above 780 nm.
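
The boundaries given above can be turned into a simple lookup. This is only a sketch: the function name is hypothetical, and it treats everything below 380 nm as ultraviolet without modelling the lower limit of the UV range.

```python
def classify_wavelength(wavelength_nm):
    """Classify a wavelength using the 380 nm and 780 nm boundaries."""
    if wavelength_nm <= 0:
        raise ValueError("wavelength must be positive")
    if wavelength_nm < 380:
        return "ultraviolet"
    if wavelength_nm <= 780:
        return "visible"
    return "infrared"


for nm in (365, 550, 850):
    print(nm, "nm:", classify_wavelength(nm))
```

The three sample values are typical of LED illumination in each band, chosen here purely as examples.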

Working Distance

Distance (usually expressed in mm) between the camera lens or imaging sensor and the object being inspected or imaged.

It can also refer to the distance between the object and the lighting source. It is rare in machine vision applications that the camera and light are positioned at the same working distance.